We have been closely monitoring an issue with our servers for the past month. Over the past 30 days, users intermittently received 500 or 429 errors from our servers when making API requests. Upon further investigation, we learned that close to 0.2% of all requests hitting our servers resulted in an error during that period.

After working with the team and the greater RapidAPI community to solve the issue (now solved!), we wanted to share the root-cause analysis of what happened and outline our process to prevent it from recurring.

Background: The RapidAPI Engine & Redis

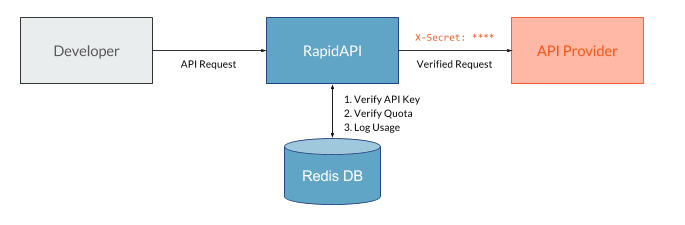

One of RapidAPI’s values to API providers is the ability to validate API requests and keys, and bill developers for API requests. RapidAPI implements an engine that gets requests from developers, verifies them and then forwards them to the actual APIs. This functionality prevents API providers from having to implement that tedious logic, and also lets developers access over 7,500 APIs with a single API key.

The verification process happening on RapidAPI involves 3 key steps:

- Verifying the developer’s API key (against a Redis cache of all keys)

- Verifying the developer’s subscription to the API, as well as their remaining quota

- Logging the API request and used quotas asynchronously into a Redis database (as well as a backup log)

This verification process relies heavily on a Redis database cluster, hosted on Redis Labs, which stores API keys and subscriptions, as well as real time subscription utilization data.
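The three steps above can be sketched roughly as follows. This is a minimal illustration, not RapidAPI's actual engine: plain Python dictionaries stand in for the Redis data, and the names (`API_KEYS`, `SUBSCRIPTIONS`, `USAGE_LOG`, `verify_request`) and the status codes returned for each failure are assumptions made for the example.

```python
import time
from collections import deque
from typing import Tuple

# Hypothetical in-memory stand-ins for the Redis data described above.
API_KEYS = {"key-123": "dev-1"}                # API key -> developer id
SUBSCRIPTIONS = {("dev-1", "weather-api"): 2}  # (developer, API) -> remaining quota
USAGE_LOG = deque()                            # a real system would drain this asynchronously


def verify_request(api_key: str, api_name: str) -> Tuple[int, str]:
    """Return an (HTTP status, message) pair for an incoming request."""
    # Step 1: verify the developer's API key.
    developer = API_KEYS.get(api_key)
    if developer is None:
        return 401, "invalid API key"  # assumed status code

    # Step 2: verify the subscription and the remaining quota.
    remaining = SUBSCRIPTIONS.get((developer, api_name))
    if remaining is None:
        return 403, "not subscribed"   # assumed status code
    if remaining <= 0:
        return 429, "quota exceeded"

    # Step 3: record the usage. Here the quota is decremented inline and the
    # log entry is only queued; the post describes this step as asynchronous.
    SUBSCRIPTIONS[(developer, api_name)] = remaining - 1
    USAGE_LOG.append((time.time(), developer, api_name))
    return 200, "forward to API"
```

Every request touches the key store and the quota store at least once, which is why the health of the Redis connection directly affects the error rate developers see.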

Outgrowing Our Database

When we first released RapidAPI, a single Redis cluster hosted on Redis Labs was more than enough for what we needed. It provided quick response times (thanks to Redis’ in-memory nature) and supported a large enough volume of read and write operations.

However, we have enjoyed tremendous growth here at RapidAPI. Since August of this year alone, we've had a 50% increase in daily request volume to RapidAPI, with tens of thousands of API requests hitting our servers every week! Unfortunately, the older architecture didn't keep up with the growth.

The weakest link was networking between RapidAPI and the Redis database. All of our Redis requests went through a single host, and the physical limitations of the networking stack on AWS started failing us (at 100,000-150,000 requests per second we started seeing dropped packets).

Upgrade & Future Plans

To solve the networking issue, we have transitioned to a brand new Redis Cluster, with multiple hosts and VPC peering to our own AWS installation. This transition is paired with a series of infrastructure and software upgrades to our engine. Overall, this will provide a faster, more robust, and more scalable platform.

With this change, RapidAPI can scale up horizontally to billions of requests per second with no single point of failure. All of the application components and all of the networking components are fully distributed horizontally, so each can scale out independently.
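To illustrate how a multi-host cluster removes the single-host bottleneck, here is a small sketch of the key-to-slot mapping used by open-source Redis Cluster: each key is hashed with CRC16 (XMODEM variant) modulo 16384, and slots are divided among hosts. The post does not say which sharding scheme the hosted cluster uses, so treat this as an assumption; the `host_for` helper and the even split of slots across hosts are hypothetical simplifications.

```python
import binascii
from typing import List

NUM_SLOTS = 16384  # fixed number of hash slots in open-source Redis Cluster


def key_slot(key: str) -> int:
    """Map a key to its hash slot: CRC16 (XMODEM) of the key, modulo 16384."""
    data = key.encode("utf-8")
    # Hash tags: if the key contains a non-empty "{...}" section,
    # only that section is hashed, so related keys share a slot.
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end > start + 1:
            data = data[start + 1:end]
    return binascii.crc_hqx(data, 0) % NUM_SLOTS


def host_for(key: str, hosts: List[str]) -> str:
    """Hypothetical helper: split the slot range evenly across hosts."""
    slots_per_host = -(-NUM_SLOTS // len(hosts))  # ceiling division
    return hosts[key_slot(key) // slots_per_host]
```

Because keys spread across slots, and slots across hosts, traffic that once passed through one network interface is divided among several, and adding hosts adds capacity.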

Over the next few months, we plan on releasing several other infrastructure upgrades, including deployment of RapidAPI’s engine on multiple data centers around the world, to make response times even faster. You can follow these changes here on the blog or in the API provider monthly newsletter.

A big shout-out to all of you for being patient throughout this process! You have helped us identify the issue and swiftly resolve it. Special thanks to community members Paolo, Ingo, Pavel and Eric for their assistance in this process!

Iddo Gino,

CEO and Founder, RapidAPI
