What is Kafka (Apache Kafka)?

Apache Kafka is an open-source distributed event streaming platform.

Kafka was developed at LinkedIn in the early 2010s. The software was soon open-sourced, put through the Apache Incubator, and has grown in use. The platform’s website claims that over 80% of Fortune 100 companies use or trust Apache Kafka [1].

The “Apache” part of the name comes from the Apache Software Foundation. This foundation is a community of developers who produce and maintain open-source software projects.

Furthermore, the platform was born out of the deconstruction of monolithic application architectures [2]. The result is an ecosystem of Producers, Consumers, Topics, Logs, Events, Connectors, Clients, and Servers. All of these terms name actors in the system that supports event streaming.

This article will briefly discuss how Apache Kafka works, its use cases, and relevant terminology. After that, I’ll walk you through how to discover and test Kafka Topics with RapidAPI easily.

How does it work?

There’s a helpful paradigm shift that has to occur when understanding Kafka after working with REST APIs. Kafka is focused on Events, not things [3].

During the operation of an application, an Event may occur. That Event contains data describing what happened. Subsequently, the Event is emitted and stored in a Log. The Log is a series of Events ordered by the time they occurred. Each Log is defined as a Topic. Therefore, each Event is defined by its Topic, timestamp, and data [4]. Finally, other devices can subscribe to the Topic and retrieve data.
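To make the Event, Log, and Topic relationship concrete, here is a minimal sketch in plain Python. It does not use Kafka at all: the `Event` and `TopicLog` classes, the `page-views` name, and the timestamps are all made up for illustration, and a real Topic is stored on a Kafka cluster rather than in a list.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class Event:
    topic: str        # name of the Log this Event belongs to
    timestamp: float  # when the Event occurred (seconds since the epoch)
    data: Any         # payload describing what happened


@dataclass
class TopicLog:
    """Hypothetical in-memory stand-in for a Topic: an append-only list."""
    name: str
    events: list = field(default_factory=list)

    def append(self, timestamp: float, data: Any) -> int:
        """Store a new Event at the end of the Log and return its position."""
        self.events.append(Event(self.name, timestamp, data))
        return len(self.events) - 1

    def read_from(self, position: int) -> list:
        """Return every Event at or after the given position, oldest first."""
        return self.events[position:]


# Two made-up Events are emitted and stored in the Log, in order.
page_views = TopicLog("page-views")
page_views.append(1618500000.0, {"path": "/home"})
page_views.append(1618500005.0, {"path": "/pricing"})
```

The important property is that Events are only ever added to the end of the Log. A reader asks for everything from a given position onward instead of modifying what is already stored.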

This is only part of the picture. Next, we need to discuss Producers and Consumers. Whenever a Client application or device creates Events, it is known as a Producer. Conversely, an application or device that subscribes to a Topic (a log of events) is a Consumer.

Example

On your phone, you have a stock market application, and you’re following the daily price of Amazon (AMZN). The application on your phone (Consumer) displays the current price for AMZN (the Topic). Your phone is constantly updating the price (reading Events), giving you real-time estimates.

How are you able to get real-time price updates? A stockbroking server emits price changes (Producer), and those changes are logged in a Topic stored in an Apache Kafka server cluster. The mobile application, in this example, reads the Topic and updates the price.

This is a simple example and does not encompass all the possible use cases for Kafka, but it helps visualize the terminology.
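The same stock price scenario can be sketched in a few lines of plain Python. This is only an in-memory illustration, not the Kafka client API: the `Topic`, `Producer`, and `Consumer` classes are hypothetical, and the prices are made-up numbers.

```python
class Topic:
    """Hypothetical in-memory Topic; real Topics live on a Kafka cluster."""

    def __init__(self, name):
        self.name = name
        self.log = []  # append-only list of (timestamp, price) Events

    def append(self, timestamp, price):
        self.log.append((timestamp, price))


class Producer:
    """Plays the stockbroking server: it emits price changes."""

    def __init__(self, topic):
        self.topic = topic

    def send(self, timestamp, price):
        self.topic.append(timestamp, price)


class Consumer:
    """Plays the phone app: it remembers how far it has read."""

    def __init__(self, topic):
        self.topic = topic
        self.offset = 0  # position of the next unread Event

    def poll(self):
        """Return Events added since the last poll and advance the offset."""
        new_events = self.topic.log[self.offset:]
        self.offset = len(self.topic.log)
        return new_events


amzn = Topic("AMZN")
price_server = Producer(amzn)
phone_app = Consumer(amzn)

price_server.send(1, 100.0)
price_server.send(2, 101.5)
first_batch = phone_app.poll()   # both price changes so far
price_server.send(3, 99.75)
second_batch = phone_app.poll()  # only the newest price change
```

Notice that the Producer and the Consumer never talk to each other directly. Both only know about the Topic, which is what lets either side be added or removed without changing the other.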

Kafka Infrastructure

It’s easy to imagine Apache Kafka working in the abstract. However, what does the technology stack behind the platform look like?

Apache Kafka is deployed as a cluster of servers. The servers work together, replicating data to protect against data loss and sharing the load to improve performance. Servers, in Kafka, are referred to as Brokers because they work as the intermediary between Producers and Consumers.
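As a rough picture of why replication protects against data loss, consider the toy model below. It is plain Python and heavily simplified: the `Broker` and `Cluster` classes and the way replicas are chosen are my own assumptions for illustration, and the sketch ignores leader election and how a returning Broker catches up.

```python
class Broker:
    """Hypothetical stand-in for one Kafka server holding copies of Logs."""

    def __init__(self, broker_id):
        self.broker_id = broker_id
        self.online = True
        self.logs = {}  # topic name -> list of Events

    def append(self, topic, event):
        self.logs.setdefault(topic, []).append(event)


class Cluster:
    def __init__(self, broker_count, replication_factor):
        self.brokers = [Broker(i) for i in range(broker_count)]
        self.replication_factor = replication_factor

    def replicas_for(self, topic):
        """Pick which Brokers hold the Topic (a simple deterministic choice)."""
        start = sum(topic.encode()) % len(self.brokers)
        return [self.brokers[(start + i) % len(self.brokers)]
                for i in range(self.replication_factor)]

    def produce(self, topic, event):
        """Write the Event to every online replica of the Topic."""
        for broker in self.replicas_for(topic):
            if broker.online:
                broker.append(topic, event)

    def consume(self, topic):
        """Read the Log from the first replica that is still online."""
        for broker in self.replicas_for(topic):
            if broker.online:
                return list(broker.logs.get(topic, []))
        raise RuntimeError("no online replica for topic " + topic)


cluster = Cluster(broker_count=3, replication_factor=2)
cluster.produce("products", {"name": "Hohner"})
cluster.replicas_for("products")[0].online = False  # simulate a failure
surviving_copy = cluster.consume("products")
```

Even with one Broker offline, the Event can still be read because a second Broker holds a copy of the same Log.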

Kafka can be deployed:

- on virtual machines

- in containers

- on-premises

- in the cloud

Additionally, you might choose to manage the cluster using a container orchestration platform like Kubernetes.

What is Event Streaming?

“[…] event streaming is the practice of capturing data in real-time […] storing these event streams durably for later retrieval; manipulating, processing, and reacting to the event streams […] and routing the event streams to different destination technologies as needed.”

Intro to Streaming, Apache Kafka Documentation

Event streaming is the core function of Apache Kafka. The stream is the lifecycle of the event as the relevant data makes its way from creation to retrieval/consumption. Apache Kafka includes a series of APIs accessed through a language-independent protocol to interact with the event streaming process. The five APIs are the:

- Producer API

- Consumer API

- Streams API

- Connect API

- Admin API

Next, we’ll focus on the Streams API.

What is Kafka Streams API?

The Kafka Streams API transforms data with very low latency. Its processing operations can be stateless, stateful, or windowed (restricted to a specified time range).

The Streams API is a Java library, and the Apache Kafka project only maintains clients for the JVM, so you won’t find client libraries for other languages within the project. Client libraries do exist for other languages, but they are not maintained by the Apache Kafka project. Confluent is a popular source of such client libraries.

Besides the language constraint, the Streams API is OS and deployment agnostic.
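Since the Streams API itself is a Java library, the snippet below is not Kafka Streams code. It is a plain-Python illustration of the three styles of processing mentioned earlier in this section (stateless, stateful, and limited to a time range), using a made-up list of page-view Events and hypothetical function names.

```python
from collections import Counter, defaultdict

# Each Event is (timestamp_in_seconds, page); the values are made up.
events = [(0, "/home"), (12, "/pricing"), (31, "/home"), (65, "/home")]


def stateless_filter(stream, page):
    """Stateless: each Event is judged on its own."""
    return [event for event in stream if event[1] == page]


def stateful_count(stream):
    """Stateful: a running count per page is carried between Events."""
    counts = Counter()
    for _, page in stream:
        counts[page] += 1
    return dict(counts)


def windowed_count(stream, window_seconds):
    """Windowed: count Events per fixed, non-overlapping time window."""
    windows = defaultdict(int)
    for timestamp, _ in stream:
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start] += 1
    return dict(windows)
```

With a 60-second window, the first three Events fall into the window starting at 0 and the last one into the window starting at 60. A real stream never ends, so a streaming library has to keep these counts up to date as Events arrive instead of looping over a finished list.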

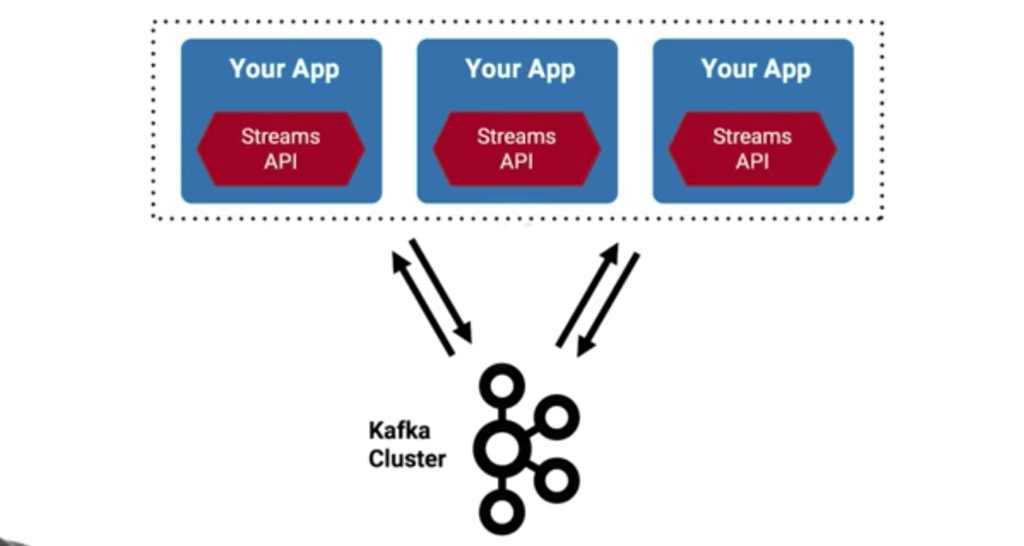

One important point with the Kafka Streams API is that it’s deployed with your Java application. It’s not set up on a different server. The API becomes a dependency in your Java application. This is slightly different than a typical API deployment.

Introducing the Streams API into the conversation often raises a question: what’s the difference between the Consumer API and the Streams API?

What Can I Use It For?

In the previous section, I introduced the Streams API and raised the question: What’s the difference between the Consumer API and the Streams API?

First, let’s reiterate that Topics reside in Kafka’s storage layer, while streams are considered part of the processing layer [6]. The Consumer API and the Streams API are part of the processing layer.

The difference between the Streams API and the Consumer API is defined by the features of the Streams API. The Streams API supports exactly-once processing semantics, fault-tolerant stateful processing, event-time processing, streams/table processing (similar to working with data in a traditional database), interactive queries, and a testing kit [7].

With the Consumer API, you would need to implement all of the aforementioned features yourself. Sometimes organizations want that lower-level control. However, for newcomers, the Streams API is a godsend for event stream processing.
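To give a feel for the streams/table idea in that feature list, here is a small plain-Python sketch. It is not the Streams API: `table_from_stream` is a hypothetical helper and the tickers and prices are made up. It shows how a stream of keyed Events can be folded into a table holding the latest value per key, with `None` treated as a delete marker.

```python
def table_from_stream(stream):
    """Fold a stream of (key, value) Events into a table of latest values."""
    table = {}
    for key, value in stream:
        if value is None:
            table.pop(key, None)  # treat None as "delete this key"
        else:
            table[key] = value
    return table


# Made-up price updates; the last one removes the "ABC" key again.
price_updates = [("AMZN", 100.0), ("ABC", 12.0), ("AMZN", 101.5), ("ABC", None)]
latest_prices = table_from_stream(price_updates)
```

Written by hand against the Consumer API, you would also have to decide where this table is stored and how it is rebuilt after a crash, which is the kind of work the Streams API takes off your plate.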

Kafka Use Cases

Some of the most common use cases for Apache Kafka are [8]:

- Messaging

- Activity Tracking

- Metrics

- Log aggregation

- Stream Processing

- Event Sourcing

- Commit Log

In the final part of this article, I’ll discuss how to discover and test Kafka Topics with RapidAPI.

How to Use Kafka Types on RapidAPI

RapidAPI is the first API platform to allow discovery of Kafka clusters and topics, viewing of schemas, and testing/consuming of records from a browser.

Next, let’s test sending and receiving events as part of the event processing stream. This is possible with RapidAPI’s support for Kafka APIs on its marketplace dashboard and through the enterprise hub.

This how-to section does not cover creating an Apache Kafka cluster and setting up topics. Thankfully, RapidAPI has published a Demo Kafka API to help us get started. Therefore, we will use this API in the rest of the article for convenience.

1. Navigate to the Demo Kafka API

Follow this link to the Demo Kafka API on the RapidAPI marketplace.

If you haven’t already, you can create an account on RapidAPI for free to explore, subscribe, and test thousands of APIs.

2. Inspect Dashboard Panels

The dashboard is divided into three panels. For REST APIs, the left side panel lists different routes. However, for Kafka APIs, the left panel lists the available Topics. For the Demo Kafka API, this includes:

- Products

- Transactions

- Page Views

The center panel allows us to interact with the Kafka cluster by inputting different values, submitting events, or consuming a real event stream. There are two parent tabs, Consume and Produce.

The Consume tab allows us to connect to an event stream and specify the partition, time offset, and maximum records to retrieve. Additionally, we can inspect the topic schema and configuration. Clicking the View Records button connects us to the stream and starts displaying events. The animation at the top of the right panel informs us that we are subscribed and listening to the Products topic.
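If it helps, the three Consume settings can be pictured as arguments to a simple lookup. The sketch below is plain Python with made-up data. `view_records` is a hypothetical function, and the way it interprets the time offset (as the earliest timestamp to include) is my assumption rather than a description of how the dashboard works internally.

```python
def view_records(partitions, partition, start_timestamp, max_records):
    """Return up to max_records Events from one partition, starting at the
    first Event whose timestamp is at or after start_timestamp."""
    log = partitions[partition]
    matching = [event for event in log if event["timestamp"] >= start_timestamp]
    return matching[:max_records]


# A made-up topic split into two partitions, each with its own ordered log.
products = {
    0: [{"timestamp": 10, "value": "harmonica"},
        {"timestamp": 30, "value": "banjo"}],
    1: [{"timestamp": 20, "value": "ukulele"}],
}

records = view_records(products, partition=0, start_timestamp=15, max_records=5)
```

Here only the second Event in partition 0 is returned, because the first one is older than the requested starting point.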

Similarly, we can select the Produce tab in the middle panel to add a new event to the stream.

3. Review the Schema and Types For the Products Topic

First, select the Products topic in the left panel.

Then, in the middle panel, click the Produce tab.

There are three sub-tabs in this section:

- Data

- Headers

- Options

The Data tab displays the Key Schema and Value Schema. Also, we have a raw data input box to submit our events based on the defined schema. Here, we can test different Kafka schema types and various Kafka data types.

Next, inspect the schema definition in the middle panel and compare it to the streamed events already in the log.

This will help us in the next step when we connect to the Products stream, create an event, and observe the event added to the stream.
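Comparing an event to a schema boils down to a check like the one sketched below in plain Python. The field names are borrowed from the example event used in step 4 of this article, but the schemas themselves are hypothetical: I am assuming every field is a string, and the real Demo Kafka API schema shown in the dashboard may differ.

```python
import json

# Hypothetical schemas: the field names come from this article's example
# event, but the "all strings" typing is an assumption.
KEY_SCHEMA = {"category": str}
VALUE_SCHEMA = {"name": str, "productId": str}


def conforms(raw_json, schema):
    """Return True if the JSON object has exactly the schema's fields,
    each with the expected Python type."""
    try:
        record = json.loads(raw_json)
    except json.JSONDecodeError:
        return False
    if not isinstance(record, dict) or set(record) != set(schema):
        return False
    return all(isinstance(record[name], kind) for name, kind in schema.items())
```

A value whose `productId` is a number instead of a string would fail this check, which is the sort of mismatch worth spotting before you try to produce an event.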

4. Consume and Produce events in the Dashboard

Now, let’s create a real event and simultaneously consume it all in the dashboard!

First, click on the Consume tab in the center panel. You can leave the default selections as they are. Click the View Records button. Events will populate on the right panel.

Next, after you connect to the Products topic, select the Produce tab in the middle panel.

In the Key input field, paste the following JSON code:

{
  "category": "harmonica"
}

For the Value input, copy and paste the code below:

{
  "name": "Hohner",
  "productId": "987654321"
}

Finally, click the Produce Records button to send this event off into the stream.

The input fields clear, and the event immediately appears in our stream!

If you do not see the new event, double-check that you are still listening to the event stream. You should see the animated dots below the header in the right panel.

Conclusion

Congratulations on producing and consuming your first events with RapidAPI! This article took a quick look at what Apache Kafka is, how it works, and what it can do. Additionally, I gave a brief tutorial on testing and inspecting your Apache Kafka APIs or public event streams with the RapidAPI dashboard. If you’re looking for more, you can read more about Apache Kafka on RapidAPI in the docs. Or, check out how to add your Kafka API to RapidAPI.

What is Kafka stream processing?

Event streaming is the practice of capturing data in real-time, storing these event streams durably for later retrieval, manipulating, processing, and reacting to the event streams, and routing the event streams to different destination technologies as needed.

Is Kafka an API?

Kafka is deployed as a cluster of servers. However, the APIs for Kafka are not part of the server deployment. They are deployed as part of your application. Your application then uses the APIs, which communicate with the server cluster.

What is Kafka used for?

Kafka is used for event-driven architectures. The Kafka platform helps collect, store, and make events available for client applications to stream data in real-time.

What are topics in Kafka?

A Topic, in Kafka, is a series of events stored in a log that belong to a similar category. For example, a topic may be a Product or Custom Activity.

What is Kafka in simple words?

Kafka is a cluster of servers that work together to facilitate event streaming. This gives computers, phones, and other devices the ability to react to data in real-time.

Footnotes

1 “Apache Kafka.” Apache Kafka, kafka.apache.org/.

2 Berglund, Tim. “What Is Apache Kafka®?” YouTube, youtu.be/FKgi3n-FyNU. Accessed 15 Apr. 2021.

3 See Berglund (2), who explains the deconstruction of monolithic applications at the beginning of the video.

4 See Berglund (2); the process is explained throughout the video.

5 Berglund, Tim. “1. Intro to Streams | Apache Kafka® Streams API.” YouTube, youtu.be/Z3JKCLG3VP4?t=538. Accessed 15 Apr. 2021.

6 Noll, Michael. “Streams and Tables in Apache Kafka: Event Processing Fundamentals.” Confluent, www.confluent.io/blog/kafka-streams-tables-part-3-event-processing-fundamentals/. Accessed 15 Apr. 2021.

7 Noll, Michael. “Kafka: Consumer API vs Streams API.” Stack Overflow, stackoverflow.com/a/44041420. Accessed 15 Apr. 2021. Commented answer to a question about the difference between the Consumer API and the Streams API.

8 “Apache Kafka.” Apache Kafka, kafka.apache.org/uses. Accessed 15 Apr. 2021. List of common use cases.
